AI


Sat Jan 09, 2016 4:16 pm

CS and AI

1.0 AI

AI FAQ

Q. What is artificial intelligence?

A. It is the Art of making intelligent machines.

Q. And what is intelligence?

A. It is the computational part of one's ability to promote one's self-interest.

Q. Is intelligence a single thing, so that one can ask a yes-or-no question, "Is this machine intelligent or not?"

A. No. Intelligence involves mechanisms, and AI research has discovered how to make computers carry out some of them and not others.

If doing a task requires only mechanisms that are well understood today, computer programs can give very impressive performances on these tasks.


A.I. arXiv | Minsky, Feynman Lectures, Warren McCulloch

Tue Jan 12, 2016 1:37 am

AI

Papers:

http://arxiv.org/list/cs.AI/recent

----------------------------------

Old and new Links

Feynman, R. - Lectures on Computation

Marvin Minsky's Home Page - MIT Media Lab

John McCarthy's Home Page

----------------------------------

The McCulloch paper.

A logical calculus of the ideas immanent in nervous activity

McCulloch-Pitts (1943) → von Neumann.

1. Compute a weighted sum of the inputs.

2. Send out a fixed-size spike of activity if the weighted sum exceeds a threshold.
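The two steps above describe a binary threshold unit. Below is a minimal Python sketch of it; the function name, the example weights, and the threshold values are illustrative assumptions, not taken from the 1943 paper. "Exceeds" is read as strictly greater than.

```python
from typing import Sequence


def mcculloch_pitts(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> int:
    """Binary threshold unit: return 1 (a fixed-size spike) or 0 (no spike)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Step 1: compute a weighted sum of the inputs.
    total = sum(x * w for x, w in zip(inputs, weights))
    # Step 2: spike only if the weighted sum strictly exceeds the threshold.
    return 1 if total > threshold else 0


# Usage: with unit weights and threshold 1.5, two binary inputs act like logical AND.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, mcculloch_pitts([a, b], [1.0, 1.0], threshold=1.5))
```

Lowering the threshold to 0.5 turns the same unit into OR, which is the usual way such units are shown to compute simple logic.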


Manhattan Gandhi, Level V

- Posts : 565

Join date : 2016-01-09

Age : 47

Location : R'Lyeh

Why People Think Computers Can't, Marvin Minsky

Mon Jul 04, 2016 2:55 pm

WHY PEOPLE THINK COMPUTERS CAN'T

Marvin Minsky, MIT

Most people think computers will never be able to think. That is, really think. Not now or ever. To be sure, most people also agree that computers can do many things that a person would have to be thinking to do. Then how could a machine seem to think but not actually think? Well, setting aside the question of what thinking actually is, I think that most of us would answer that by saying that in these cases, what the computer is doing is merely a superficial imitation of human intelligence. It has been designed to obey certain simple commands, and then it has been provided with programs composed of those commands. Because of this, the computer has to obey those commands, but without any idea of what's happening.

Indeed, when computers first appeared, most of their designers intended them for nothing but huge, mindless computations. That's why the things were called "computers". Yet even then, a few pioneers -- especially Alan Turing -- envisioned what's now called "Artificial Intelligence", or "AI". They saw that computers might possibly go beyond arithmetic, and maybe imitate the processes that go on inside human brains.

Today, with robots everywhere in industry and movie films, most people think AI has gone much further than it has. Yet still, "computer experts" say machines will never really think. If so, how could they be so smart, and yet so dumb?

CAN COMPUTERS UNDERSTAND?

Can we make computers understand what we tell them? In 1965, Daniel Bobrow wrote one of the first Rule-Based Expert Systems. It was called "STUDENT" and it was able to solve a variety of high-school algebra "word problems", like these:

The distance from New York to Los Angeles is 3000 miles. If the average speed of a jet plane is 600 miles per hour, find the time it takes to travel from New York to Los Angeles by jet.

Bill's father's uncle is twice as old as Bill's father. Two years from now Bill's father will be three times as old as Bill. The sum of their ages is 92. Find Bill's age.

Most students find these problems much harder than just solving the formal equations of high school algebra. That's just cook-book stuff -- but to solve the informal word problems, you have to figure out what equations to solve and, to do that, you must understand what the words and sentences mean. Did STUDENT understand? It used a lot of tricks. It was programmed to guess that "is" usually means "equals". It didn't even try to figure out what "Bill's father's uncle" means -- it only noticed that this phrase resembles "Bill's father". It didn't know that "age" and "old" refer to time, but it took them to represent numbers to be put in equations. With a couple of hundred such word-trick-facts, STUDENT sometimes managed to get the right answers.
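To make the "cook-book" half of the argument concrete, here is a small Python sketch that solves both word problems once they have been turned into equations. The translation from words to equations was done by hand here (reading "is" as "equals", as STUDENT did); the helper `solve_linear` is a hypothetical name and is not Bobrow's code. It uses exact Gauss-Jordan elimination with `fractions.Fraction`, so no rounding occurs.

```python
from fractions import Fraction


def solve_linear(rows: list[list[int]], rhs: list[int]) -> list[Fraction]:
    """Solve A x = b exactly by Gauss-Jordan elimination."""
    n = len(rows)
    # Augmented matrix of exact fractions: each row is [coefficients..., right-hand side].
    m = [[Fraction(v) for v in row] + [Fraction(b)] for row, b in zip(rows, rhs)]
    for col in range(n):
        # Find a row at or below `col` with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("system has no unique solution")
        m[col], m[pivot] = m[pivot], m[col]
        # Scale the pivot row so the pivot entry becomes 1.
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    return [m[r][n] for r in range(n)]


# Jet problem: reading "is" as "equals" gives 3000 = 600 * t, so t = 3000 / 600.
jet_hours = Fraction(3000, 600)

# Bill problem, unknowns ordered (B, F, U) = (Bill, father, father's uncle):
#   U = 2F             ->  0*B - 2*F + 1*U = 0
#   F + 2 = 3(B + 2)   -> -3*B + 1*F + 0*U = 4
#   B + F + U = 92     ->  1*B + 1*F + 1*U = 92
bill, father, uncle = solve_linear(
    [[0, -2, 1], [-3, 1, 0], [1, 1, 1]],
    [0, 4, 92],
)
print(jet_hours, bill, father, uncle)  # 5 8 28 56
```

So the jet takes 5 hours and Bill is 8. Everything after the hand translation is mechanical, which is Minsky's point: the hard part is getting from the words to the three equations.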

Then dare we say that STUDENT "understands" those words? Why bother? Why fall into the trap of feeling that we must define old words like "mean" and "understand"? It's great when words help us get good ideas, but not when they confuse us. The question should be: does STUDENT avoid the "real meanings" by using tricks? Or is it that what we call meanings really are just clever bags of tricks?

Let's take a classic thought-example, such as what a number means. STUDENT obviously knows some arithmetic, in the sense that it can find such sums as "5 plus 7 is 12". But does it understand numbers in any other sense -- say, what 5 "is" -- or, for that matter, what are "plus" or "is"? What would you say if I asked you, "What is Five?" Early in this century, the philosophers Bertrand Russell and Alfred North Whitehead proposed a new way to define numbers. "Five", they said, is "the set of all possible sets with five members". This set includes each set of five ball-point pens, and every litter of five kittens. Unhappily, it also includes such sets as "the Five things you'd least expect" and "the five smallest numbers not included in this set" -- and these lead to bizarre inconsistencies and paradoxes. The basic goal was to find perfect definitions for ordinary words and ideas. But even to make the idea work for Mathematics, getting around these inconsistencies made the Russell-Whitehead theory too complicated for practical, common-sense use. Educators once actually tried to make children use this theory of sets, in the "New Mathematics" movement of the 1960s; it only further set apart those who liked mathematics from those who dreaded it. I think the trouble was, it tried to get around a basic fact of mind: what something means to me depends to some extent on many other things I know.

What if we built machines that weren't based on rigid definitions? Won't they just drown in paradox, equivocation, inconsistency? Relax! Most of what we people "know" already overflows with contradictions; still we survive. The best we can do is be reasonably careful; let's just make our machines that careful, too. If there remain some chances of mistake, well, that's just life.

If every meaning in a mind depends on other meanings in that mind, does that make things too ill-defined to make a scientific project work? No, even when things go in circles, there still are scientific things to do! Just make new kinds of theories -- about those circles themselves! The older theories only tried to hide the circularities. But that lost all the richness of our wondrous human meaning-webs; the networks in our human minds are probably more complex than any other structure Science ever contemplated in the past. Accordingly, the detailed theories of Artificial Intelligence will probably need, eventually, some very complicated theories. But that's life, too.

Let's go back to what numbers mean. This time, to make things easier, we'll think about Three. I'm arguing that Three, for us, has no one single, basic definition, but is a web of different processes that each get meaning from the others. Consider all the roles "Three" plays. One way we tell a Three is to recite "One, Two, Three", while pointing to the different things. To do it right, of course, you have to (i) touch each thing once and (ii) not touch any twice. One way is to count out loud while you pick up each object and remove it. Children learn to do such things in their heads or, when that's too hard, to use tricks like finger-pointing. Another way to tell a Three is to use some Standard Set of Three things. Then bring the set of things to that standard set, and match them one-to-one: if all are matched and none are left, then there were Three. That "standard Three" need not be things, for words like "one, two, three" work just as well. For Five we have a wider choice. One can think of it as groups of Two and Three, or One and Four. Or, one can think of some familiar shapes: a pentagon, an X, a Vee, a cross, an aeroplane; they all make Fives.

[Figure: dot patterns arranging five things into familiar shapes]
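The different ways of "telling a Three" described above can be written as separate small procedures that agree with one another without sharing a single definition. This is a toy Python sketch; the function names, the kitten list, and the five-word vocabulary are illustrative assumptions, and the counting routine only handles piles of up to five.

```python
from collections.abc import Sequence

NUMBER_WORDS = ["one", "two", "three", "four", "five"]
STANDARD_THREE = ("one", "two", "three")  # words serve as the standard set


def count_by_reciting(pile: Sequence[str]) -> str:
    """Say the next number word each time an object is picked up and removed."""
    remaining = list(pile)  # copy, so the caller's pile is left alone
    word, spoken = "zero", 0
    while remaining:
        remaining.pop()  # a removed object cannot be touched twice
        word = NUMBER_WORDS[spoken]  # sketch only covers piles of up to five
        spoken += 1
    return word


def matches_standard_set(things: Sequence[str], standard: Sequence[str]) -> bool:
    """Match things one-to-one with a standard set; True if none are left on either side."""
    left = list(things)
    for _member in standard:
        if not left:
            return False  # a member of the standard set found no partner
        left.pop()  # pair one thing with this member
    return not left  # all matched and none left over


def two_part_splits(n: int) -> list[tuple[int, int]]:
    """Ways to see n as two groups, e.g. Five as One and Four or Two and Three."""
    return [(a, n - a) for a in range(1, n // 2 + 1)]


kittens = ["tabby", "calico", "tuxedo"]
print(count_by_reciting(kittens))  # three
print(matches_standard_set(kittens, STANDARD_THREE))  # True
print(two_part_splits(5))  # [(1, 4), (2, 3)]
```

Each procedure can check the others: a pile that recites to "three" should also match the standard set, which is one small instance of meanings supporting each other.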

I think it's bad psychology when teachers shape our children's mathematics into long, thin, fragile, definition tower-chains, instead of robust cross-connected webs. Those chains break at their weakest links; those towers topple at the slightest shove. And that's what happens in mathematics class to the mind of a child who takes only a moment to watch a pretty cloud go by. The purposes of ordinary people are not the same as those of mathematicians and philosophers, who want to simplify by having as few connections as can be. In real life, the best ideas are as cross-connected as can be. Perhaps that's why our culture makes most children so afraid of mathematics. We think we help them get things right, by making things go wrong most times! Perhaps, instead, we ought to help them build more robust networks in their heads.
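The contrast between a "tower-chain" and a "cross-connected web" can be illustrated as a graph-robustness question: lose one idea, and check whether the rest still connect. This is only an analogy in Python, not a model Minsky proposes; the node names and cross-links are invented for illustration.

```python
from collections import deque


def undirected(pairs: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Build an adjacency map in which every link works in both directions."""
    graph: dict[str, set[str]] = {}
    for a, b in pairs:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def survives_break(graph: dict[str, set[str]], broken: str) -> bool:
    """True if all remaining ideas stay reachable from one another after `broken` is lost."""
    nodes = [n for n in graph if n != broken]
    if not nodes:
        return True
    seen = {nodes[0]}
    queue = deque([nodes[0]])
    while queue:  # breadth-first search that never steps onto the broken node
        current = queue.popleft()
        for nxt in graph[current]:
            if nxt != broken and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen) == len(nodes)


chain_links = [("counting", "matching"), ("matching", "Three"), ("Three", "Five"), ("Five", "shapes")]
cross_links = [("counting", "Three"), ("counting", "Five"), ("matching", "Five"), ("Three", "shapes")]

tower_chain = undirected(chain_links)
web = undirected(chain_links + cross_links)

print(survives_break(tower_chain, "Three"))  # False
print(survives_break(web, "Three"))  # True
```

In the chain, losing the middle link cuts the ideas in two; in the web, every single loss leaves the rest connected.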

ARE HUMANS SELF-AWARE?

Most people assume that computers can't be conscious, or self-aware; at best they can only simulate the appearance of this. Of course, this assumes that we, as humans, are self-aware. But are we? I think not. I know that sounds ridiculous, so let me explain.

If by awareness we mean knowing what is in our minds, then, as every clinical psychologist knows, people are only very slightly self-aware, and most of what they think about themselves is guess-work. We seem to build up networks of theories about what is in our minds, and we mistake these apparent visions for what's really going on. To put it bluntly, most of what our "consciousness" reveals to us is just "made up". Now, I don't mean that we're not aware of sounds and sights, or even of some parts of thoughts. I'm only saying that we're not aware of much of what goes on inside our minds.

When people talk, the physics is quite clear: our voices shake the air; this makes your ear-drums move -- and then computers in your head convert those waves into constituents of words. These somehow then turn into strings of symbols representing words, so now there's somewhere in your head that "represents" a sentence. What happens next?

When light excites your retinas, this causes events in your brain that correspond to texture, edges, color patches, and the like. Then these, in turn, are somehow fused to "represent" a shape or outline of a thing. What happens then?

It is too easy to say things like, "Computers can't do (xxx), because they have no feelings, or thoughts". But here's a way to turn such sayings into foolishness. Change them to read like this: "Computers can't do (xxx), because all they can do is execute incredibly intricate processes, perhaps millions at a time". Now, such objections seem less convincing -- yet all we did was face one simple, complicated fact: we really don't yet know what the limits of computers are. Now let's face the other simple fact: our notions of the human mind are just as primitive.

Why are we so reluctant to admit how little is known about how the mind works? Perhaps we fear that too much questioning might tear the veils that clothe our mental lives.

To me there is a special irony when people say machines cannot have minds, because I feel we're only now beginning to see how minds possibly could work -- using insights that came directly from attempts to see what complicated machines can do. Of course we're nowhere near a clear and complete theory -- yet. But in retrospect, it now seems strange that anyone could ever hope to understand such things before they knew much more about machines. Except, of course, if they believed that minds are not complex at all.

A 1961 program written by James Slagle could solve calculus problems at the level of college students; it even got an A on an MIT exam. But it wasn't till around 1970 that we managed to construct robot programs that could see and move well enough to handle ordinary things like children's building blocks and do things like stack them up, take them down, rearrange them, and put them in boxes.

Why could we make programs do those grown-up things before we could make them do those childish things? The answer is a somewhat unexpected paradox: much "expert" adult thinking is basically much simpler than what happens in a child's ordinary play! It can be harder to be a novice than to be an expert! This is because, sometimes, what an expert needs to know and do can be quite simple -- only, it may be very hard to discover, or learn, in the first place. Thus, Galileo had to be smart indeed, to see the need for calculus. He didn't manage to invent it. Yet any good student can learn it today.

I'll bet that when we try to make machines more sensible, we'll find that learning what is wrong turns out to be as important as learning what's correct. In order to succeed, it helps to know the likely ways to fail. Freud talked about censors in our minds, that keep us from forbidden acts or thoughts.

This idea is not popular in contemporary psychology, perhaps because censors only suppress behavior, so their activity is invisible on the surface. When a person makes a good decision, we tend to ask what "line of thought" lies behind it. But we don't so often ask what thousand prohibitions might have warded off a thousand bad alternatives. If censors work inside our minds, to keep us from mistakes and absurdities, why can't we feel that happening? Because, I suppose, so many thousands of them work at once that, if you had to think about them, you'd never get much done. They have to ward off bad ideas before you "get" those bad ideas.

Perhaps this is one reason why so much of human thought is "unconscious". Each idea that we have time to contemplate must be a product of many events that happen deeper and earlier in the mind. Each conscious thought must be the end of processes in which it must compete with other proto-thoughts, perhaps by pleading little briefs in little courts. But all that we do sense of that are just the final sentences.

Then, is it possible to program a computer to be self-conscious? People usually expect the answer to be "no". What if we answered that machines are capable, in principle, of even more and better consciousness than people have?

I think this could be done by providing machines with ways to examine their own mechanisms while they are working. In principle, at least, this seems possible; we already have some simple AI programs that can understand a little about how some simpler programs work. (There is a technical problem about the program being fast enough to keep up with itself, but that can be solved by keeping records.) The trouble is, we still know far too little, yet, to make programs with enough common sense to understand even how today's simple AI problem-solving programs work. But once we learn to make machines that are smart enough to understand such things, I see no special problem in giving them the "self-insight" they would need to understand, change, and improve themselves.
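The parenthetical about "keeping records" can be illustrated in miniature: rather than inspecting itself in real time, a program writes down each step as it runs and examines that record afterwards. This is a toy Python sketch of the record-keeping idea only; the `recorded` decorator, the `TRACE` list, the choice of Euclid's gcd as the program under study, and the property checked by `review` are all illustrative assumptions, not Minsky's design.

```python
import functools
from typing import Any, Callable

# The program's record of its own steps, written as it runs and read afterwards.
TRACE: list[tuple[str, tuple[Any, ...]]] = []


def recorded(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap fn so every call is noted in TRACE before it runs."""
    @functools.wraps(fn)
    def wrapper(*args: Any) -> Any:
        TRACE.append((fn.__name__, args))
        return fn(*args)
    return wrapper


@recorded
def gcd(a: int, b: int) -> int:
    # Euclid's algorithm, recursive so the record holds several steps.
    return a if b == 0 else gcd(b, a % b)


def review(trace: list[tuple[str, tuple[Any, ...]]]) -> dict[str, Any]:
    """Examine the finished record: how many steps ran, and did b keep shrinking?"""
    steps = [args for name, args in trace if name == "gcd"]
    shrinking = all(later[1] < earlier[1] for earlier, later in zip(steps, steps[1:]))
    return {"steps": len(steps), "second_argument_always_shrank": shrinking}


print(gcd(48, 18))  # 6
print(review(TRACE))  # {'steps': 4, 'second_argument_always_shrank': True}
```

The review runs at its own pace after the fact, so it never has to keep up with the computation it describes.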

It will be a long time before we learn enough about common sense reasoning to make machines as smart as people are. Today, we already know quite a lot about making useful, specialized, "expert" systems. We still don't know how to make them able to improve themselves in interesting ways.

Just as Evolution changed man's view of Life, AI will change mind's view of Mind. As we find more ways to make machines behave more sensibly, we'll also learn more about our mental processes. In its course, we will find new ways to think about "thinking" and about "feeling". Our view of them will change from opaque mysteries to complex yet still comprehensible webs of ways to represent and use ideas. Then those ideas, in turn, will lead to new machines, and those, in turn, will give us new ideas. No one can tell where that will lead, and only one thing's sure right now: there's something wrong with any claim to know, today, of any basic differences between the minds of men and those of possible machines.

First published in AI Magazine, vol. 3 no. 4, Fall 1982.

Marvin Minsky, MIT

Most people think computers will never be able to think. That is, really

think. Not now or ever. To be sure, most people also agree that computers

can do many things that a person would have to be thinking to do. Then

how could a machine seem to think but not actually think? Well, setting

aside the question of what thinking actually is, I think that most of us

would answer that by saying that in these cases, what the computer is

doing is merely a superficial imitation of human intelligence. It has been

designed to obey certain simple commands, and then it has been provided

with programs composed of those commands. Because of this, the

computer has to obey those commands, but without any idea of what's

happening.

Indeed, when computers first appeared, most of their designers intended

them for nothing only to do huge, mindless computations. That's why the

things were called "computers". Yet even then, a few pioneers --

especially Alan Turing -- envisioned what's now called "Artificial

Intelligence" - or "AI". They saw that computers might possibly go

beyond arithmetic, and maybe imitate the processes that go on inside

human brains.

Today, with robots everywhere in industry and movie films, most people

think Al has gone much further than it has. Yet still, "computer experts"

say machines will never really think. If so, how could they be so smart,

and yet so dumb?

CAN COMPUTERS UNDERSTAND?

Can we make computers understand what we tell them? In 1965, Daniel

Bobrow wrote one of the first Rule-Based Expert Systems. It was called

"STUDENT" and it was able to solve a variety of high-school algebra "word

problems"., like these:

The distance from New York to Los Angeles is 3000 miles. If the

average speed of a jet plane is 600 miles per hour, find the time it

takes to travel from New York to Los Angeles by jet.

Bill's father's uncle is twice as old as Bill's father. Two years from

now I Bill's father will be three times as old as Bill. The sum of their

ages is 92.

Find Bill's age.

Most students find these problems much harder than just solving the

formal equations of high school algebra. That's just cook-book stuff -- but

to solve the informal word problems, you have to figure out what

equations to solve and, to do that, you must understand what the words and

sentences mean. Did STUDENT understand? It used a lot of tricks. It was

programmed to guess that "is" usually means "equals". It didn't even try to

figure out what "Bill's fathers' uncle" means -- it only noticed that this

phrase resembles "Bill's father". It didn't know that "age" and "old" refer

to time, but it took them to represent numbers to be put in equations. With

a couple of hundred such word-trick-facts, STUDENT sometimes managed

to get the right answers.

Then dare we say that STUDENT "understands" those words? Why bother.

Why fall into the trap of feeling that we must define old words like

"mean" and "understand"? It's great when words help us get good ideas,

but not when they confuse us. The question should be: does STUDENT avoid

the "real meanings" by using tricks?

Or is it that what we call meanings really are just clever bags of tricks.

Let's take a classic thought-example, such as what a number means.

STUDENT obviously knows some arithmetic, in the sense that it can find

such sums as "5 plus 7 is 12". But does it understand numbers in any other

sense - say, what 5 "is" - or, for that matter, what are "plus" or "is"? What

would say if I asked you, "What is Five"? Early in this century, the

philosophers Bertrand Russell and Alfred North Whitehead proposed a

new way to define numbers. "Five", they said, is "the set of all possible sets

with five members". This set includes each set of five ball-point pens, and

every litter of five kittens. Unhappily, it also includes such sets as "the

Five things you'd least expect" and "the five smallest numbers not

included in this set" -- and these lead to bizarre inconsistencies and

paradoxes. The basic goal was to find perfect definitions for ordinary

words and ideas. But even to make the idea work for Mathematics, getting

around these inconsistencies made the Russell-Whitehead theory too

complicated for practical, common sense, use. Educators once actually

tried to make children use this theory of sets, in the "New Mathematics"

movement of the 1960's; it only further set apart those who liked

mathematics from those who dreaded it. I think the trouble was, it tried to

get around a basic fact of mind: what something means to me depends to

some extent on many other things I know.

What if we built machines that weren't based on rigid definitions? Wont

they just drown in paradox, equivocation, inconsistency? Relax! Most of

what we people "know" already overflows with contradictions; still we

survive. The best we can do is be reasonably careful; let's just make our

machines that careful, too. If there remain some chances of mistake, well,

that's just life.

If every meaning in a mind depends on other meanings in that mind,

does that make things too ill-defined to make a scientific project work?

No, even when thing go in circles, there still are scientific things to do!

Just make new kinds of theories - about those circles themselves! The

older theories only tried to hide the circularities. But that lost all the

richness of our wondrous human meaning-webs; the networks in our

human minds are probably more complex than any other structure

Science ever contemplated in the past. Accordingly, the detailed theories

of Artificial Intelligence will probably need, eventually, some very

complicated theories. But that's life, too.

Let's go back to what numbers mean. This time, to make things easier,

well think about Three. I'm arguing that Three, for us, has no one single,

basic definition, but is a web of different processes that each get meaning

from the others. Consider all the roles "Three" plays. One way we tell a

Three is to recite "One, Two, Three", while pointing to the different

things. To do it right, of course, you have to (i) touch each thing once and

(ii) not touch any twice. One way to count out loud while you pick up each

object and remove it. Children learn to do such things in their heads or,

when that's too hard, to use tricks like finger-pointing. Another way to

tell a Three is to use some Standard Set of Three things. Then bring set of

things to the other set, and match them I one-to-one: if all are matched

and none are left, then there were Three. That "standard I Three" need

not be things, for words like "one, two, three" work just as well. For Five

we have a wider choice. One can think of it as groups of Two and Three, or

One and Four. Or, one can think of some familiar shapes -. a pentagon, an

X, a Vee, a cross, an aeroplane; they all make Fives.

o o o o o o o o

o o o o o o o o o o o

o o o o o o

I think it's bad psychology, when teachers shape our children's

mathematics into long, thin, fragile, definition tower-chains, instead of

robust cross-connected webs. Those chains break at their weakest links,

those towers topple at the slightest shove. And that's what happens to a

child's mind in mathematics class, who only takes a moment just to watch

a pretty cloud go by. The purposes of ordinary people are not the same as

those of mathematicians and philosophers, who want to simplify by

having just as few connections as can be. In real life, the best ideas are

cross-connected as can be. Perhaps that's why our culture makes most

children so afraid of mathematics. We think we help them get things

right, by making things go wrong most times! Perhaps, instead, we ought

to help them build more robust networks in their heads.

ARE HUMANS SELF-AWARE?

Most people assume that computers can't be conscious, or self-aware; at

best they can only simulate the appearance of this. Of course, this

assumes that we, as humans, are self-aware. But are we? I think not. I

know that sounds ridiculous, so let me explain.

If by awareness we mean knowing what is in our minds, then, as every

clinical psychologist knows, people are only very slightly self-aware, and

most of what they think about themselves is guess-work. We seem to build

up networks of theories about what is in our minds, and we mistake these

apparent visions for what's really going on. To put it bluntly, most of

what our "consciousness" reveals to us is just "made up". Now, I don't

mean that we're not aware of sounds and sights, or even of some parts of

thoughts. I'm only saying that we're not aware of much of what goes on

inside our minds.

When people talk, the physics is quite clear: our voices shake the air; this

makes your ear-drums move -- and then computers in your head convert

those waves into constituents of words. These somehow then turn into

strings of symbols representing words, so now there's somewhere in your

head that "represents" a sentence. What happens next?

When light excites your retinas, this causes events in your brain that

correspond to texture, edges, color patches, and the like. Then these, in

turn, are somehow fused to "represent" a shape or outline of a thing.

What happens then?

It is too easy to say things like, "Computer can't do (xxx), because they

have no feelings, or thoughts". But here's a way to turn such sayings into

foolishness. Change them to read like this. "Computer can't do (xxx),

because all they can do is execute incredibly intricate processes, perhaps

millions at a time". Now, such objections seem less convincing -- yet all

we did was face one simple, complicated fact: we really don't yet know

what the limits of computers are. Now let's face the other simple fact: our

notions of the human mind are just as primitive.

Why are we so reluctant to admit how little is known about how the mind

works? Perhaps

we fear that too much questioning might tear the veils that clothe our

mental lives.

To me there is a special irony when people say machines cannot have

minds, because I feel we're only now beginning to see how minds

possibly could work -- using insights that came directly from attempts to

see what complicated machines can do. Of course we're nowhere near a

clear and complete theory - yet. But in retrospect, it now seems strange

that anyone could ever hope to understand such things before they knew

much more about machines. Except, of course, if they believed that minds

are not complex at all.

A 1961

program written by James Slagle could solve calculus problems at the

level of college students; it even got an A on an MIT exam. But it wasn't till

around 1970 that we managed to construct a robot programs that could see

and move well enough to handle ordinary things like children's building

blocks and do things like stack them up, take them down, rearrange them,

and put them in boxes.

Why could we make programs do those grown-up things before we could

make them do those childish things? The answer is a somewhat

unexpected paradox: much "expert" adult thinking is basically much

simpler than what happens in a child's ordinary play! It can be harder to

be a novice than to be an expert! This is because, sometimes, what an

expert needs to know and do can be quite simple -- only, it may be very

hard to discover, or learn, in the first place. Thus, Galileo had to be smart

indeed, to see the need for calculus. He didn't manage to invent it. Yet any

good student can learn it today.

I'll bet that when we try to make machines more sensible, we'll find that

learning what is wrong turns out to be as important as learning what's

correct. In order to succeed, it helps to know the likely ways to fail. Freud

talked about censors in our minds, that keep us from forbidden acts or

thoughts.

This idea is not popular in contemporary psychology, perhaps because

censors only suppress behavior, so their activity is invisible on the

surface. When a person makes a good decision, we tend to ask what "line

of thought" lies behind it. But we don't so often ask what thousand

prohibitions might have warded off a thousand bad alternatives. If

censors work inside our minds, to keep us from mistakes and absurdities,

why can't we feel that happening? Because, I suppose, so many thousands

of them work at once that, if you had to think about them, you'd never get

much done. They have to ward off bad ideas before you "get" those bad

ideas.

Perhaps this is one reason why so much of human thought is

"unconscious". Each idea that we have time to contemplate must be a

product of many events that happen deeper and earlier in the mind. Each

conscious thought must be the end of processes in which it must compete

with other proto-thoughts, perhaps by pleading little briefs in little

courts. But all that we do sense of that are just the final sentences.

Then, is it possible to program a computer to be self-conscious? People

usually expect the answer to be "no". What if we answered that machines

are capable, in principle, of even more and better consciousness than

people have?

I think this could be done by providing machines with ways to examine

their own mechanisms while they are working. In principle, at least, this

seem possible; we already have some simple Al programs that can

understand a little about how some simpler programs work. (There is a

technical problem about the program being fast enough, to keep up with

itself, but that can be solved by keeping records.) The trouble is, we still

know far too little, yet, to make programs with enough common sense to

understand even how today's simple Al problem-solving programs work.

But once we learn to make machines that are smart enough to understand

such things, I see no special problem in giving them the "self-insight"

they would need to understand, change, and improve themselves.

It will be a long time before we learn enough about common sense reasoning to make machines as smart as people are. Today, we already know quite a lot about making useful, specialized, "expert" systems. We still don't know how to make them able to improve themselves in interesting ways.

Just as Evolution changed man's view of Life, AI will change mind's view of Mind. As we find more ways to make machines behave more sensibly, we'll also learn more about our mental processes. In its course, we will find new ways to think about "thinking" and about "feeling". Our view of them will change from opaque mysteries to complex yet still comprehensible webs of ways to represent and use ideas. Then those ideas, in turn, will lead to new machines, and those, in turn, will give us new ideas. No one can tell where that will lead and only one thing's sure right now: there's something wrong with any claim to know, today, of any basic differences between the minds of men and those of possible machines.

First published in AI Magazine, vol. 3 no. 4, Fall 1982.

Manhattan GandhiLeveL V

- Posts : 565

Join date : 2016-01-09

Age : 47

Location : R'Lyeh

Deep Neural Nets, introduction

Fri Oct 20, 2017 2:30 am

Manhattan GandhiLeveL V

G. E. Hinton deep learning Neural Nets

Tue Mar 13, 2018 3:00 pm

Manhattan GandhiLeveL V

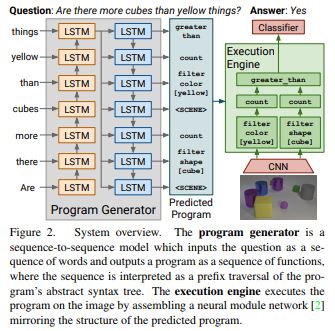

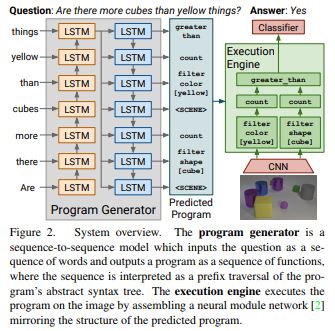

J.Johnson Visual Reasoning

Tue Mar 13, 2018 7:00 pm

Inferring and Executing Programs for Visual Reasoning

[Johnson et al., arXiv:1705.03633]

https://arxiv.org/pdf/1705.03633.pdf

Manhattan GandhiLeveL V

Generative Adversarial Nets

Tue Mar 13, 2018 7:00 pm

Manhattan GandhiLeveL V

Unsupervised machine learning

Tue Mar 13, 2018 7:00 pm

Manhattan GandhiLeveL V

Robert Kwiatkowski Agnostic Self Modeling

Mon Sep 09, 2019 1:30 am

https://www.youtube.com/watch?v=FHisJi3ZBeo

Robert Kwiatkowski is a PhD student at Columbia University’s Creative Machines Lab.

This robot arm is free to move around and collect information about itself, so it learns what happens to its own body when it moves.

“The robot knows what happens to the arm when it does something.”

It can learn this despite beginning with literally no assumptions about the world:

“The robot at the start had absolutely no information about anything. It had no assumptions about physics, body structure, nothing. And the only information it receives is sensory information from the world.”

Mr Kwiatkowski said: “We are trying to move away from a paradigm where programmers tell the robots who they are and how they work. We are moving towards a paradigm where robots figure out what they are and how they work, so we can streamline their efficiency and allow them a little bit of autonomy.”

The self-awareness was engineered using deep learning.
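The idea of a robot learning "what happens to itself when it moves" from sensory data alone can be sketched in a few lines of Python. This is a toy under stated assumptions, not the Columbia lab's method: the "body" is a hypothetical two-link planar arm, the robot learns by random motor babbling, and its self-model is a simple k-nearest-neighbour lookup over its own past experience instead of the deep network used in the actual work. The learner never reads the link lengths; it only sees the joint angles it commanded and the tip position its sensor reported.

```python
import math
import random

# Hypothetical "body": a two-link planar arm. The learner never reads these
# numbers; they are only used to simulate what the sensors report.
_LINK1, _LINK2 = 1.0, 0.7


def observe_tip(theta1, theta2):
    """Simulated sensor reading: where the arm's tip ends up for two joint angles."""
    x = _LINK1 * math.cos(theta1) + _LINK2 * math.cos(theta1 + theta2)
    y = _LINK1 * math.sin(theta1) + _LINK2 * math.sin(theta1 + theta2)
    return x, y


def babble(n_moves, seed=0):
    """Motor babbling: issue random joint commands and remember what was sensed."""
    rng = random.Random(seed)
    memory = []
    for _ in range(n_moves):
        cmd = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        memory.append((cmd, observe_tip(*cmd)))
    return memory


def predict_tip(memory, cmd, k=3):
    """Self-model: predict the tip position by averaging the k most similar past moves.
    (Ignores angle wrap-around, which is acceptable for a toy.)"""
    def joint_distance(entry):
        (a1, a2), _ = entry
        return (a1 - cmd[0]) ** 2 + (a2 - cmd[1]) ** 2

    nearest = sorted(memory, key=joint_distance)[:k]
    x = sum(tip[0] for _, tip in nearest) / len(nearest)
    y = sum(tip[1] for _, tip in nearest) / len(nearest)
    return x, y


memory = babble(5000)
print(predict_tip(memory, (0.5, -0.8)))  # the robot's own guess
print(observe_tip(0.5, -0.8))            # what actually happens
```

The two printed positions should lie close together; more babbling gives the self-model denser experience and therefore better predictions.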

DelearthLeveL V

- Posts : 541

Join date : 2016-01-06

Age : 47

Location : Antarctica

Re: AI

Mon Sep 09, 2019 1:30 am

Aaron Sloman: “I for one, do not think defining consciousness is important at all, and I believe that it diverts attention from important and difficult problems. The whole idea is based on a fundamental misconception that just because there is a noun "consciousness" there is some ‘thing’ like magnetism or electricity or pressure or temperature, and that it's worth looking for correlates of that thing. Or on the misconception that it is worth trying to prove that certain mechanisms can or cannot produce ‘it’, or trying to find out how ‘it’ evolved, or trying to find out which animals have ‘it’, or trying to decide at which moment ‘it’ starts when a fetus develops, or at which moment ‘it’ stops when brain death occurs, etc. There will not be one thing to be correlated but a very large collection of very different things.”

I completely agree with Sloman’s view. To understand how our thinking works, we must study those “very different things” and then ask what kinds of machinery could accomplish some or all of them. In other words, we must try to design—as opposed to define—machines that can do what our minds can do.

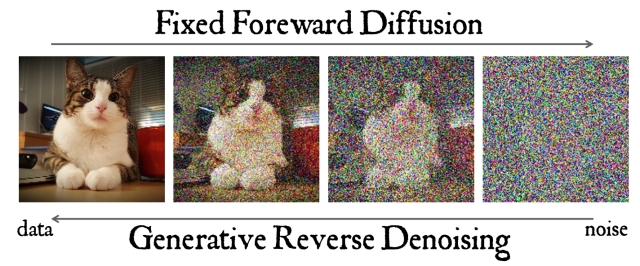

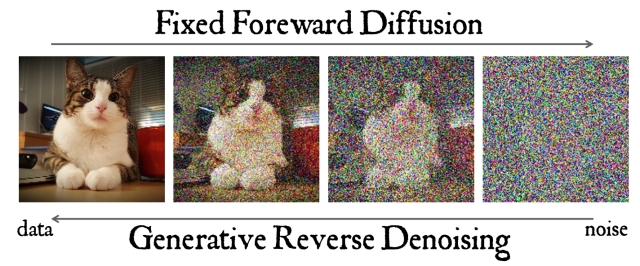

Diffusion models

Tue Jun 28, 2022 12:00 am

Diffusion models are inspired by non-equilibrium thermodynamics. They define a Markov chain of diffusion steps that gradually distorts the training data by adding Gaussian noise, progressively removing detail until the data becomes pure noise. A neural network then learns to reverse this diffusion process, so that it can create new samples from noise.

https://lilianweng.github.io/posts/2021-07-11-diffusion-models/
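The forward (noising) half of this process has a closed form, which a short pure-Python sketch can show. Assumptions: a linear noise schedule with T = 1000 steps and beta running from 1e-4 to 0.02 (the values used in the DDPM paper by Ho et al.), a toy three-value "image", and hypothetical helper names. The trained network is not shown; instead the sketch hands the true noise to `estimate_x0` to show why learning to predict the noise is enough to recover clean data from a noisy sample.

```python
import math
import random


def linear_betas(T=1000, beta_start=1e-4, beta_end=0.02):
    """Noise variance beta_t for each of the T forward steps (linear schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def alpha_bars(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s): how much signal survives to step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0, t, abar, rng):
    """Jump straight to step t of the forward chain:
    x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps, with eps ~ N(0, I)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


def estimate_x0(xt, eps_hat, t, abar):
    """Invert the formula above given a noise estimate eps_hat.
    In DDPM-style training, a neural network learns to output eps_hat from (x_t, t)."""
    a = abar[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_hat)]


betas = linear_betas()
abar = alpha_bars(betas)
rng = random.Random(0)
x0 = [1.0, -0.5, 0.25]                  # toy 3-value data point
xt, eps = q_sample(x0, 500, abar, rng)  # halfway along the chain
print(abar[0], abar[-1])                # near 1 at the start, near 0 at the end
print(estimate_x0(xt, eps, 500, abar))  # recovers x0 when the true noise is known
```

In a real diffusion model `eps_hat` comes from the network and is only approximate, which is why sampling usually walks back through many small denoising steps rather than jumping straight to x0.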

Two text-to-image generators:

DALL·E 2: https://arxiv.org/abs/2204.06125

Imagen: https://arxiv.org/abs/2205.11487

https://openai.com/dall-e-2/

https://www.youtube.com/watch?v=NYGdO5E_5oY
